Yesterday, I reviewed one of the papers talking about GFS(Google File System) which I can’t really understand when I attend the Computer System course in my graduate program. With my work experience after graduation, this time when I looked back to this paper, I am happy that I start understanding what is going on with Distributed File System and the scheme behind it. So I will write down the summary about this time of review.

Comparison of two architectures

For a distributed system, we can consider it means we have to use a group of machines to store and process data.

So to make sure the well coordination, we have to design how machines communicate to each other.

Normally we have two ways to design the distributed pattern. One is called Peer to Peer.

The other is called Master Slave.

Peer to Peer

According to the name, the scheme indicates a cluster of machines with the same level.

- Advantage: If one of the machine is down, it won’t affect the whole system

- Disadvantage: Multiple machines should communicate with each other to make the data consistent, which is complex in implementation

Master Slave

This scheme indicates a cluster of machines with different levels, i.e master and slave.

- Advantage: The implementation is intuitive and simple and it is easy to keep the data consistent. Normally the

master machine will have a so-called

write ahead log. Slave machines can read from master machine to sync the most recent changes. - Disadvantage: If the master machine is down, the whole system is down as well.

How the storage works

A file system is used for operation of read and write files. It is necessary for use to know how files are stored in disk.

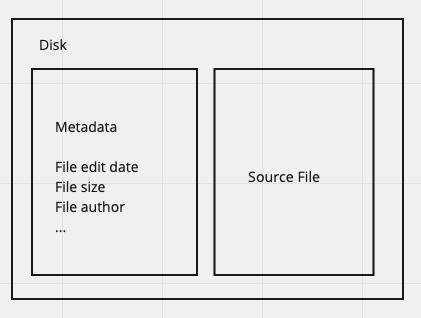

A file system need to store metadata of file(date, size, author) and source file to the disk. Thinking about when we look through files in Finder, we can see the date, size of files easily without clicking the file. So the frequency of accessing to metadata is much higher than that of a source file. Based on this point and the hardware structure of disk, a file system normally put the metadata together to save the tracking time. Otherwise if metadata is separated(saved ahead of their own source files), it will take more time to load the metadata in Finder, which is bad user experience for a file system.

After talking about how metadata is stored, we switch to source file storage. Say if we have a 100G file to store, we can use a single machine file system to hold this file. For the storage in a single machine, the file itself is actually sharded into small pieces and stored into small blocks in disk. Each block normally can save 4kb data. The blocks are stacked in disk. A good way to store those small pieces of the file is saving them separately into blocks, which is good for modification. If we continuously store those small pieces of the file into blocks, when the file length increases, we have to move the other files’ blocks forward to insert the modification to make sure blocks of the same file stay together.

When the file size increases to 100T, one issue will occur, i.e too many blocks to save this file. Now we

consider increasing the size of blocks, say 64mb each. Now we call the block as chunk. The advantage of chunk is

reducing the size of metadata because the number of chunks is smaller than that of blocks. However, an inevitable

disadvantage of chunk is effective for small files. Thus GFS has the assumption that it is for large file storage

Combination of Master Slave and Chunk Usage

GFS is a combination of Master Slave architecture and chunk usage. Although Master Slave has the disadvantage of master failure, it’s fine for a file system to be down for a few minutes or so to restart the master server since a file system is not time sensitive. When the file size increase to 100P, we have to use a cluster of machines to hold the file. The architecture of GFS is one master machine plus multiple chunk servers(slave). Master stores the metadata, meaning which part of a file is stored to which chunk server. Instead of saving the offset(the chunk number in chunk server holding file pieces) in the master machine, the offset will be stored as metadata in the corresponding chunk servers. This will reduce the size of metadata in the master bringing less pressure for master. Apart from this advantage, it will also reduce the traffic between master and chunk server since they don’t need to communicate with each other about offset metadata. After knowing the location of chunk server from master, the chunk server can fetch the file pieces using the offset in their own.

Write

For a distributed file system, it’s better to write a file in separated pieces. Since the file is large, if the file is written in a failure, rewriting the whole file is a waste of time. Therefore, writing separately is good for rewriting the failure parts. Since the chunk size is 64mb normally, we also shard the large file into 64mb each to increase the space efficiency. When a write operation happens, master will assign chunk server and record the chunk list as metadata.

For modification, in a distributed file system, it’s hard to control the chunk changes and metadata re-allocation (if the file becomes larger or smaller). Thus, we can directly delete the previous file and rewrite the file piece by piece again.

Read

When a read operation happens, we first access master machine to get the chunk list. According to the chunk list, we can locate the pieces and then fetch data from those chunks.

Failure and Recovery

From the previous section, we know the system will be down if the master machine is down. But normally one master machine is enough for most scenarios. We can reboot machine if the master machine is down and it may only take a few minutes. Following is the most common Q&A for the failure and recovery of distributed system.

How to identify if chunk on the disk is broken?

We can use checksum method to check if data is broken. Checksum method is commonly used even for dependency check. We can use MD5, SHA256 and XOR operation etc as a checksum method.

How to avoid chunk data loss?

Replica. Normally we need 3 copies of data. Two copies are closed to each other for quick recovery. The other copy may locate far away from these two copies for security reason. For example, if there is a fire happens in the location of the two copies of data, we still have one copy in another location.

Key point here in replica is write operation. It is better not to write through master so that we can prevent unnecessary pressure for master and reduce the probability of master crash. We can get chunk server location from master and directly write into one of the chunk replica severs(lead replica) and then let lead replica sever sync data with the other two gradually. The lead replica selection depends on the distance as well as traffic between clients and servers. In this way, we can reduce the write pressure to the system at a time. Moreover, syncing inbound among chunk servers will be faster than the write operation between clients and servers.

How to find whether a chunk is down?

We can let chunk servers report to master periodically. If the master doesn’t get report from a chunk server for a long time. The master will consider the chunk server is down and will try reboot and recovery operation.